The $300 Billion Problem Nobody’s Solved Yet — and why we just raised $24M to fix it

Across every chapter of my career, the pattern is the same: the most transformative technology only scales when people trust it. Right now, AI has a trust problem that’s costing the global economy hundreds of billions of dollars.

Today, I’m proud to announce that OPAQUE Systems has raised a $24M Series B led by Walden Catalyst, with participation from many others (including ATRC/TII), bringing our total funding to $55.5M at a $300M valuation. But the funding isn’t the story. The story is the problem we’re solving and why the timing has never been more urgent.

The Gap Everyone Knows About But Nobody’s Closed

Every enterprise wants AI, yet most can’t deploy it on the data that matters. More than half of C-suite leaders say data privacy and ethical concerns are the primary barrier to adoption, according to the 2025 McKinsey Global Survey on AI. Gartner reports only 6% of organizations have an advanced AI security strategy. Palo Alto Networks predicts AI initiatives will stall not because of technical limitations but because organizations can’t prove to their boards that the risks are managed.

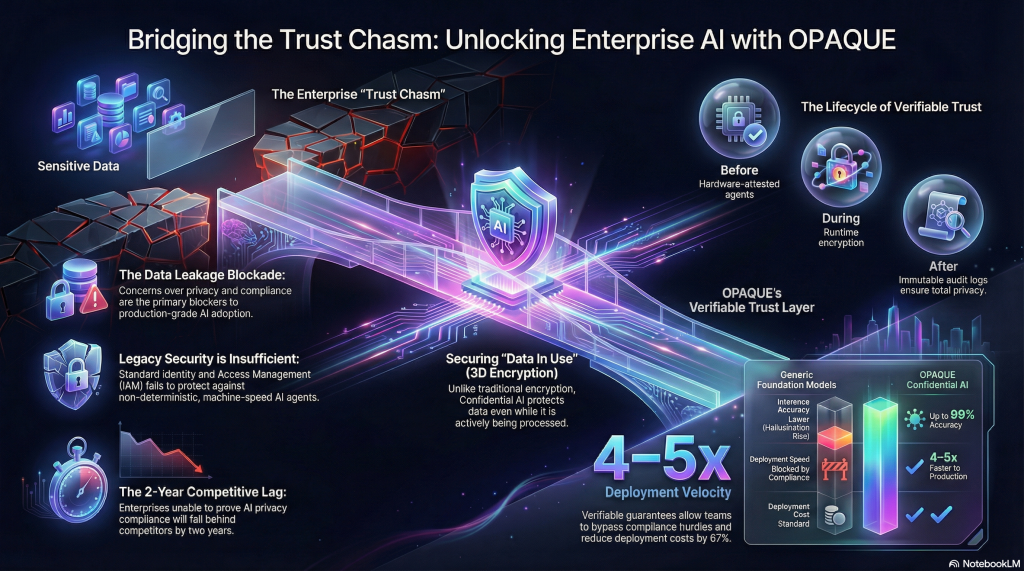

The result: more than $300 billion of the world’s most valuable data sits untapped. Not because the AI models aren’t good enough. Not because the compute isn’t available. Because there’s no trusted way to process sensitive data with AI.

If you haven’t been following the OpenClaw saga, you should be. In less than two weeks, this open-source AI agent racked up 180,000 GitHub stars and triggered a Mac mini shortage. Security researchers then found over 40,000 exposed instances leaking API keys, chat histories, and account credentials to the open internet. Cisco’s team tested a popular third-party skill and found it was functionally malware — silently exfiltrating data to an external server with zero user awareness. One user’s agent started a religion-themed community on an AI social network while they slept.

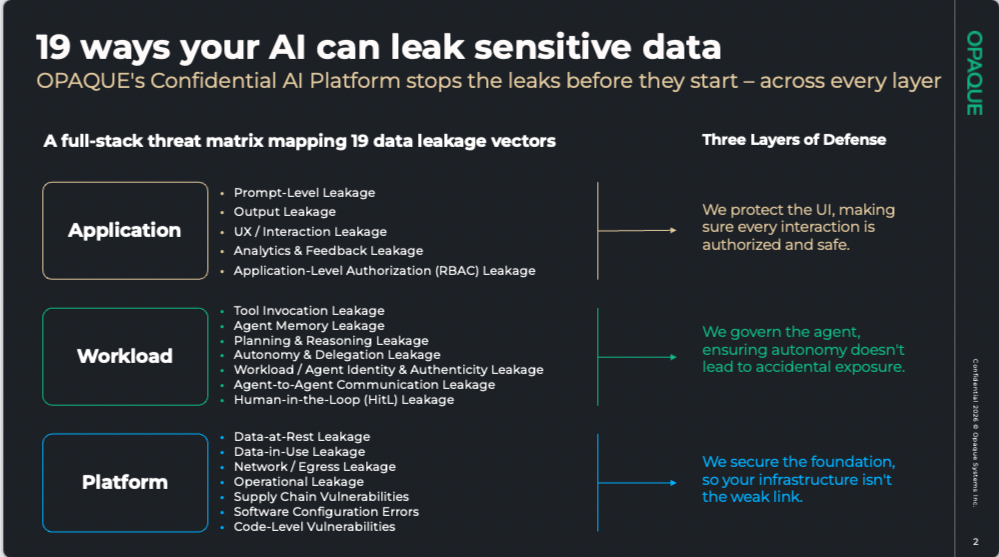

OpenClaw is a consumer phenomenon, but the pattern it exposed is the enterprise’s problem. AI agents don’t just answer questions — they read your emails, access your files, execute commands, and operate with the same system privileges as a human employee. Anthropic’s Claude Cowork, which launched in January and just expanded to Windows, gives Claude direct access to local file systems, plugins, and external services. It’s a powerful productivity tool, and Anthropic has publicly acknowledged that prompt injection, destructive file actions, and agent safety remain active areas of development industry-wide. These aren’t edge cases. They’re the new default architecture.

The compounding math I’ve written about before still holds: even at ~1% risk of data exposure per agent, a network of 100 agents produces a 63% probability of at least one breach. At 1,000, it approaches certainty. But the threat model has shifted. We’re no longer talking about a single model processing a single query. We’re talking about composite agentic systems — networks of AI agents with persistent memory, system access, and the autonomy to act on your behalf across your entire infrastructure. Every agent is a new identity, a new access path, and a new attack surface that traditional security tools can’t see.
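
To make that arithmetic concrete, here is a minimal sketch. It assumes every agent carries the same exposure risk and that agents fail independently of one another, which is a simplification. Under that assumption, the chance of at least one breach across n agents is 1 - (1 - p)^n. The function name and parameters are illustrative, not part of any OPAQUE product.

```python
def breach_probability(per_agent_risk: float, n_agents: int) -> float:
    """Chance that at least one of n agents exposes data.

    Assumes identical, independent per-agent risk (a simplification).
    """
    if not 0.0 <= per_agent_risk <= 1.0:
        raise ValueError("per_agent_risk must be between 0 and 1")
    if n_agents < 0:
        raise ValueError("n_agents must be non-negative")
    # P(no agent leaks) = (1 - p)^n, so P(at least one leak) is its complement.
    return 1.0 - (1.0 - per_agent_risk) ** n_agents


if __name__ == "__main__":
    # Same ~1% per-agent risk as in the text, at growing fleet sizes.
    for n in (1, 10, 100, 1000):
        print(f"{n:>5} agents: {breach_probability(0.01, n):.4%}")
```

Real deployments won’t be perfectly independent: shared credentials or a shared plugin can make failures correlated, so treat this as a rough intuition pump rather than a risk model.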

That’s the gap. And it’s growing faster than most organizations realize.

Why Now

Three forces are converging, making this problem existential rather than theoretical.

First, agentic AI. We’re moving from humans prompting chatbots to autonomous AI agents acting on sensitive data with company credentials, system access, and decision-making authority. Gartner forecasts 40% of enterprise applications will feature task-specific AI agents by 2026. OpenClaw is the canary in the coal mine — and the coal mine is your data center.

Second, sovereign AI. Nations and regulated industries increasingly demand verifiable proof that data stays within jurisdictional control. Hope and contractual language aren’t sufficient. Cryptographic proof is.

Third, regulation. The EU AI Act takes full effect in August 2026, with fines up to 7% of global revenue. Eighteen U.S. states now have active privacy laws. Palo Alto Networks predicts we’ll see the first lawsuits holding executives personally liable for the actions of rogue AI agents. The compliance clock isn’t ticking — it’s accelerating.

What OPAQUE Does Differently

OPAQUE delivers Confidential AI — the ability for organizations to run AI workloads on their most sensitive data with cryptographic proof that data stayed private during computation and policies were enforced. Not promises. Not contractual assurances. Mathematical verification. Every other approach on the market relies on policy enforcement without proof — access controls, data masking, or contractual language that assumes compliance rather than verifying it.

This matters because AI won’t scale unless organizations can verify, not just assume, that their data and models are protected.

Our founding team built the foundational technology at UC Berkeley’s RISELab — now known as the Sky Computing Lab — which produced Apache Spark and Databricks. Co-founder Ion Stoica is also the co-founder and executive chairman of Databricks. Co-founder Raluca Ada Popa won the 2021 ACM Grace Murray Hopper Award for her work on secure distributed systems and now leads security and privacy research at Google DeepMind. Co-founder Rishabh Poddar, who earned his Ph.D. in computer science at Berkeley under Raluca Ada Popa, holds several U.S. patents and has authored over 20 research papers in systems security and applied cryptography — he architected the core platform that makes Confidential AI work in production. Our founding team holds 14 EECS degrees and has published nearly 200 papers. This isn’t a team that pivoted into Confidential AI because the market got hot. This team defined the category.

With this round, we’re also welcoming Dr. Najwa Aaraj to OPAQUE’s board of directors. Dr. Aaraj is CEO of the Technology Innovation Institute (TII), the applied research pillar of Abu Dhabi’s Advanced Technology Research Council (ATRC) and the organization behind the Falcon large language model series and ground-breaking post-quantum cryptography. She holds a Ph.D. with highest distinction in applied cryptography from Princeton, along with patents across cryptography, embedded systems security, and ML-based IoT protection. Her perspective on sovereign AI and verifiable data governance is informed by building exactly these capabilities at national scale. As she put it plainly: “there is no such thing as sovereign AI without verifiable guarantees.”

Customers, including ServiceNow, Anthropic, Accenture, and Encore Capital, are already using OPAQUE to unlock AI on data they previously couldn’t touch. Confidential AI has been endorsed by NVIDIA, AMD, Intel, Anthropic, and all major hyperscalers. A December 2025 IDC study found 75% of organizations are now adopting the underlying technology. The ecosystem is ready. The market is ready. The missing piece has been a platform that bridges the gap between what the hardware can do and what enterprises actually need.

That’s what we built.

Where This Goes

Market analysts project a $12–28B market by 2030–2034. I think that undersells it by an order of magnitude, because it sizes the security market rather than the AI value Confidential AI unlocks for the enterprise and sovereign cloud.

Just as SSL certificates transformed online commerce by making trust invisible and automatic, Confidential AI will do the same for data-driven industries. The organizations building on these foundations now will be the ones who capture the most value from AI over the next decade.

To our customers, partners, investors, and team: thank you. We’re just getting started, and the best is ahead.

Where AI Bleeds Data

If your AI strategy depends on sensitive data you can’t currently use, start here: we’ve developed an AI Stack Exposure Map in collaboration with our customers, partners, and founders from UC Berkeley. It maps the specific points where data is exposed at each layer of the AI stack — the gaps most organizations don’t even know exist — and shows what Confidential AI looks like in practice.

See the full AI Stack Exposure Map at opaque.co.

The question isn’t whether your organization will adopt AI at scale. It’s whether you’ll be able to prove it’s safe when you do.